If you ever find yourself lost in the streets, Google Maps is always there to save you. It is perhaps the most widely used app by Google. Well, guess who seems to have lost direction this time around?

Earlier this morning, a Twitter user going by the username @AHappyChipmunk stumbled upon a search query that left the Twitterati stunned.

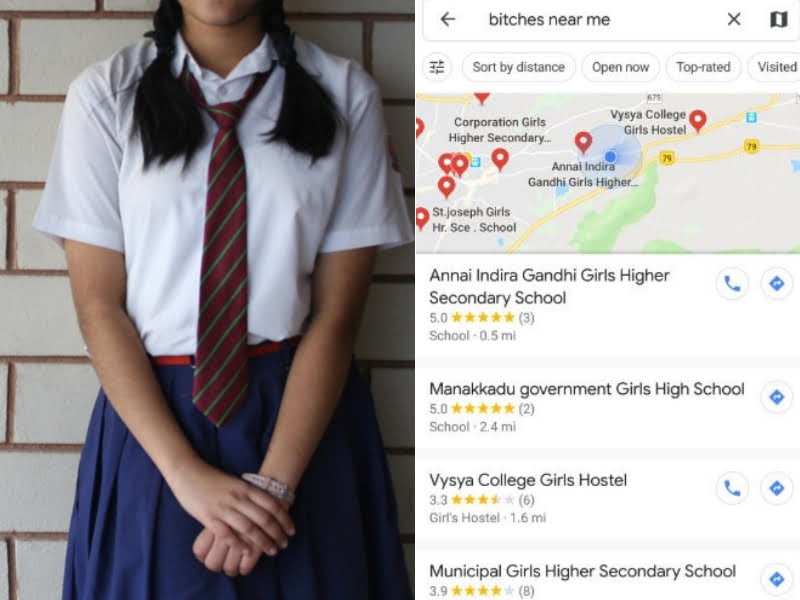

Google, can you explain why this fuckin exists pic.twitter.com/w0eslFoVSz

— scholar avalakki (@kirkiriputti) November 25, 2018

If you search for “bit*hes near me” on Google Maps, the results show the addresses of girls’ schools, colleges, hostels and PG (paying guest) accommodations in your vicinity.

The search result is outright derogatory and has understandably left netizens furious. It also leaves women and young girls vulnerable, since the places where they live and study are surfaced by an abusive search term.

Not All Of It Is Google’s Fault

Now before you lose your sh*t and start boycotting Google, giving Google Maps 1 star and bad reviews on the Play Store (we know you do it), let me set this straight: it is not ALL Google’s fault. And no, before you demand someone’s head, it isn’t one particular employee’s fault either.

It is Google’s fault to the extent that its search algorithm works this way. The algorithm seems to associate “bit*hes” with girls.

But it is just as much our fault (as in, the users’) as well. After all, Google’s search algorithm is based on artificial intelligence (AI) and works by machine learning: it learns and adjusts itself as people use it. At the end of the day, AI is a reflection of us.


Tech Expert Explains What May Have Happened

Prasanto K Roy, a tech journalist, explained what may have happened in a Twitter thread.

+ So Google AI/ML system learns that "bitches near me" is a search for girls schools and girls hostels. AI/ML is doing what it's supposed to – learn from human behavior, however good or bad. AI/ML needs oversight, moderation as it develops.

— PKR | প্রশান্ত | پرشانتو (@prasanto) November 26, 2018

Let’s understand this with an example. You search for “bit*hes near me” on Google and, after scrolling through, say, 10 pages of results, you click on girls’ hostels or PGs.

With more and more people doing the same, Google’s algorithm will realize that people looking for “bit*hes” often click on girls’ hostels and PGs, and so it’ll start to show those addresses first.
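The feedback loop described above can be sketched in a few lines of Python. This is only a toy illustration of the general idea of click-based re-ranking, not Google’s actual algorithm, which is not public. The class name `ClickFeedbackRanker`, the query string and the listing names are all hypothetical, made up for this example.

```python
from collections import defaultdict


class ClickFeedbackRanker:
    """Toy ranker (hypothetical): results clicked more often for a query move up."""

    def __init__(self):
        # clicks[query][result] -> how many times users clicked that result
        self.clicks = defaultdict(lambda: defaultdict(int))

    def record_click(self, query, result):
        # Every click is treated as a vote that the result matches the query.
        self.clicks[query][result] += 1

    def rank(self, query, candidates):
        # Sort by click count, highest first. Python's sort is stable,
        # so results with equal counts keep their original order.
        counts = self.clicks.get(query, {})
        return sorted(candidates, key=lambda r: -counts.get(r, 0))


ranker = ClickFeedbackRanker()
candidates = ["listing A", "listing B", "listing C"]

# With no click history, the original order is returned unchanged.
before = ranker.rank("some query", candidates)

# Many users run the same query and click the last listing.
for _ in range(25):
    ranker.record_click("some query", "listing C")

# The heavily clicked listing now ranks first for that query.
after = ranker.rank("some query", candidates)
print(before)
print(after)
```

Nothing in this sketch checks whether the association it learns is acceptable. It simply amplifies whatever users click on, which is why such systems need oversight and moderation.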

It wouldn’t come as a big surprise that people in India might actually do that. Someone searching for “bit*hes” near them may well be interested in girls’ hostels or PGs, and this is exactly where we are going wrong.

Here’s What You Can Do Now

Ever since the tweet went viral, a lot of users have been posting screenshots of their own search results without realizing that they are also revealing their own location. This may leave them vulnerable, so I’d suggest you not do that.

So, what can you as a user do? You can report the issue by sending feedback via Google Maps. That is the most direct way to bring the issue to Google’s attention.

Oh, and as aptly pointed out by NewsBytes, if you search for “dogs near me” on Google Maps, it shows pet houses and animal grooming parlors.

Says a lot about the deep-rooted problem in our mindsets, doesn’t it?

Image Credits: Google Images and Twitter

Sources: Beebom, NewsBytes

Find the blogger at @manas_ED
