The death of 25-year-old Suchir Balaji has shaken up the world especially after it came just weeks of an explosive profile by The New York Times where he spoke at length about his time at OpenAI and the harmful effects of artificial intelligence he saw.

The Indian-American researcher who formerly worked at OpenAI was found dead in his San Francisco apartment on November 26 as per reports. While the police have claimed that he died by suicide, however, the timing of it raises certain questions.

Balaji in a profile by the NYT had spoken about his former company and alleged that they were violating US copyright laws during the development of the ChatGPT chatbot along with also several other dark truths.

What Did Suchir Balaji Reveal?

Copyright Issues

According to NYT, Balaji spoke about how he, initially, did not know about the copyright issues with OpenAI and believed that the company was “free to use any internet data, whether it was copyrighted or not.”

It was only after the release of ChatGPT in 2022 that he began to have second thoughts about what the company was doing and how it was doing things. According to NYT, he believed that “OpenAI’s use of copyrighted data violated the law and that technologies like ChatGPT were damaging the internet.”

In his interview with The New York Times he said, “If you believe what I believe, you have to just leave the company.”

Data Training

The 25-year-old from California had been working with OpenAI for almost four years after graduating from the University of California Berkeley in 2021 with a degree in Computer Science.

A year after joining he started working on the GPT-4 project and among his duties was to collect vast amounts of digital data for analysis.

However, it was during this time that he started to question things since the GPT-4 project was initially not expected to “compete with existing internet services”.

He’d thought it would be somewhat similar to GPT-3 which wasn’t a chatbot but instead “a technology that allowed businesses and computer coders to build other software apps.”

He further claimed that not just ChatGPT but other such AI generative chatbots are also “destroying the commercial viability of the individuals, businesses and internet services that created the digital data used to train these A.I. systems.”

AI Darker Than Thought?

Balaji became interested in technology after coming across Deepmind’s technologies, especially the neural network. Speaking about AI Balaji said, “I thought that A.I. was a thing that could be used to solve unsolvable problems, like curing diseases and stopping ageing,” and added, “I thought we could invent some kind of scientist that could help solve them.”

Balaji joined a group of graduate students from Berkely in 2020 who began working for OpenAI and then during the development of GPT-4, he started gathering digital data which was “a neural network that spent months analyzing practically all the English language text on the internet” as per New York Times.

Balaji and his colleagues didn’t really have an idea that this would be released to the public and instead treated it as a research project since “with a research project, you can, generally speaking, train on any data… That was the mindset at the time.”

Read More: Is It Time To Be Afraid Of AI?

ChatGPT Not Meeting The 4 Criteria Of The “Fair Use” Doctrine

In a 23 October post on his blog (https://suchir.net/fair_use.html), Balaji also discussed the thin line between AI training and fair use, titled “When does generative AI qualify for fair use?”

Speaking with NYT Balaji said, “The outputs aren’t exact copies of the inputs, but they are also not fundamentally novel.”

He wrote about the training data that ChatGPT used to create its vast system and among them he stated, “Model developers like OpenAI and Google have also signed many data licensing agreements to train their models on copyrighted data: for example with Stack Overflow, Reddit, The Associated Press, News Corp, etc.

It’s unclear why these agreements would be signed if training on this data was “fair use”, but that’s beside the point. Given the existence of a data licensing market, training on copyrighted data without a similar licensing agreement is also a type of market harm, because it deprives the copyright holder of a source of revenue.”

He concluded his post by writing, “None of the four factors seems to weigh in favour of ChatGPT being a fair use of its training data. That being said, none of the arguments here are fundamentally specific to ChatGPT either, and similar arguments could be made for many generative AI products in a wide variety of domains.”

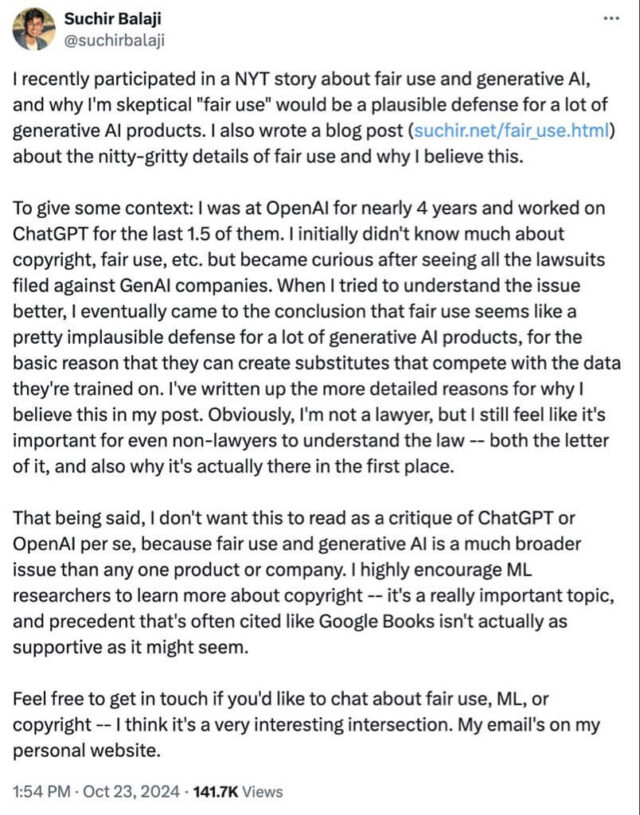

Balaji’s last post on X/Twitter on 24 October 2024 also discussed his profile on NYT and how the whole fair use argument for generative AI is quite flimsy. He also stated that there needs to be more clarity in that sector.

He wrote, “When I tried to understand the issue better, I eventually came to the conclusion that fair use seems like a pretty implausible defence for a lot of generative AI products, for the basic reason that they can create substitutes that compete with the data they’re trained on.

I’ve written up more detailed reasons for why I believe this in my post. Obviously, I’m not a lawyer, but I still feel like it’s important for even non-lawyers to understand the law — both the letter of it, and also why it’s actually there in the first place.”

Image Credits: Google Images

Sources: The Indian Express, The Hindu, The New York Times

Find the blogger: @chirali_08

This post is tagged under: Suchir Balaji, Suchir Balaji death, Suchir Balaji ai, Suchir Balaji open ai, Suchir Balaji openai truths, openai, openai truths, ai, artificial intelligence,

Disclaimer: We do not hold any right, or copyright over any of the images used, these have been taken from Google. In case of credits or removal, the owner may kindly mail us.

Other Recommendations:

Google’s AI Gemini Abuses Student; Pesters Him To ‘Please Die’