Imagine waking up to a deepfake video or photo of yourself, one you never posed for, never consented to and never even knew existed, circulating online, damaging your reputation and chipping away at your sense of trust and safety.

This is not a hypothetical scenario but the cold reality of deepfakes: digitally altered images and videos that use your face and your identity and can ruin a reputation in a matter of seconds.

What Are Deepfakes?

Deepfakes are AI-generated images, videos and audio that imitate a person’s facial features, voice and other elements of physical identity, often with the intention of deceiving or defrauding others. They are created using deep learning technology to generate realistic fake media of a person or situation.

An open letter signed by Yoshua Bengio, a Canadian computer scientist, and other experts in artificial intelligence and deep learning states, “Today, deepfakes often involve sexual imagery, fraud, or political disinformation. Since AI is progressing rapidly and making deepfakes much easier to create, safeguards are needed.”

As technology advances, the manipulation of information through deepfakes has become very common. Celebrities, politicians, public figures and even ordinary people are falling prey to this loophole that technology has created.

According to a report by McAfee, almost 75% of Indians have fallen into the trap of deepfakes. About 44% of such cases concerned public figures, 37% affected public trust in the media, and 31% interfered with elections.

Pratim Mukherjee, a senior director at McAfee, said, “Recently, India has been witness to an unprecedented surge in cases of deepfake content involving public and private figures. The ease with which AI can manipulate voices and visuals raises critical questions about the authenticity of content, particularly during a critical election year.”

One such case came in 2024, when Bollywood actor Ranveer Singh became the victim of a deepfake. The video that surfaced showed the actor asking voters to vote for the Congress and criticising the government.

Later, officials confirmed that an FIR was filed under IPC sections 417, 468 and 469, which cover cheating, forgery for the purpose of cheating and forgery for the purpose of harming reputation, respectively.

The actor took to X (formerly Twitter) to warn his fans about the ill effects of deepfakes.

In 2024, actor Aamir Khan found himself in a similar situation after a deepfake video that showed him promoting a political party went viral. The actor’s official spokesperson told the media, “We are alarmed by the recent viral video alleging that Aamir Khan is promoting a particular political party. He would like to clarify that this is a fake video and is totally untrue. He has reported the matter to various authorities related to this issue, including filing an FIR with the Cyber Crime Cell of the Mumbai Police.”

When technology is misused to create false information that defames a person or organisation, it not only harms the victim’s mental health but also violates their dignity and privacy.

These deepfakes are not limited to public figures and their reputations; such manipulated videos and images can also be used to commit financial fraud and other heinous crimes against women and children.

The Horror Of Deepfakes

The terror of deepfakes spreads beyond just financial loss. It’s an invasion of privacy, a non-consensual leak of information, a disruption of dignity and trust and most importantly, a crime.

Actresses and many other women are often targeted for defamation and blackmail. Deepfaked content is used as a weapon against women, especially to create sexually explicit, derogatory material meant to blackmail, harass and shame them, leading to emotional trauma and abuse.

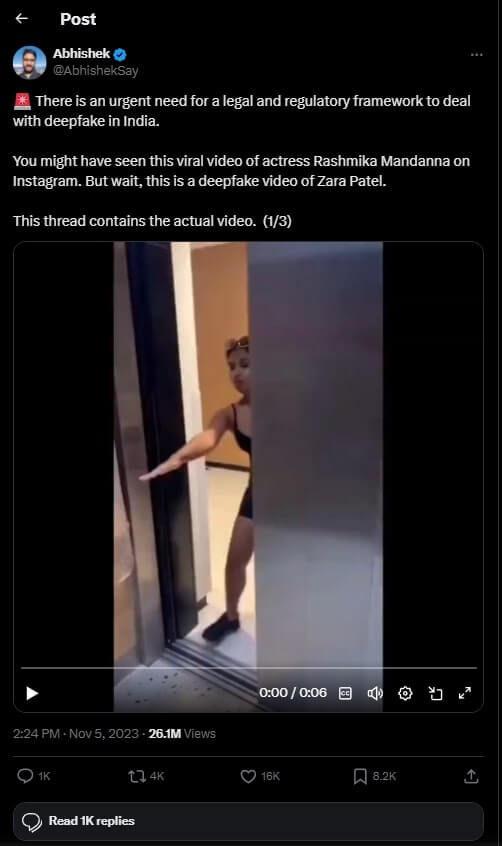

In 2023, actress Rashmika Mandanna became a target of deepfakes. A degrading deepfaked video of the actress went viral, after which several other derogatory posts and images began circulating. She later took to social media to speak up about the incident and said she had been “really hurt”.

A more recent victim is the actress Girija Oak. The attention began with praise for the simplicity with which she carried herself in an interview, but it quickly escalated into morphed images and videos of her being circulated.

The actress said her biggest concern was the impact these images and videos would have on her personal life. “I have a 12-year-old son. He doesn’t use social media yet, but eventually he will… These obscene images of his mother are something he will see one day.”

She urged viewers not to engage with such content, saying that enjoying it was as bad as creating it.

A Final Appeal

A deepfake isn’t just an example of misuse of technology; it is an attack on consent and dignity. Actors and public figures aren’t the only victims of this technological fraud. Ordinary people, especially young women, are targeted too.

Whether the victim is an actor or an ordinary person, the pain and fear are the same. Every time a person’s identity is misused, it breaks a piece of their will and can scar them for life. Clicking on, watching and sharing such content makes us as guilty as the offenders themselves.

How To Detect Deepfakes And Protect Yourself

Even though deepfakes can look very realistic, a few signs often give them away:

- Unnatural facial movements: A deepfaked video often lacks fine detail, fails to keep up with natural facial movements and has poor lip-sync.

- Unusually clean audio: Deepfaked content often has unusually clean audio that can sound delayed or out of sync with the video.

- Inconsistent lighting: AI-generated media often has inconsistent lighting, with the face appearing brighter than the rest of the body or the background.

- Blurred and distorted images: A morphed image will often have blurry or distorted edges.

To avoid falling prey to such technological threats, a few precautions help:

- Being cautious about what’s shared online: Personal information and media should be handled with caution.

- Not trusting suspicious sites and links: Always use trusted and secure sites, as a lot of information can be stolen through the digital footprints we leave on suspicious sites.

- Watermarking uploaded media: When uploading anything online, it’s safer to watermark it to discourage theft and misuse.

- Using strong passwords: A good way to keep offenders out of your social media accounts is to set strong passwords and use appropriate privacy settings.

- Awareness and seeking help: As technology moves ahead, it’s important to stay well-informed and seek professional help when required.
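
For readers comfortable with a little code, the strong-password tip above can be illustrated with a short Python sketch. It uses Python’s standard `secrets` module to build a random password that mixes lowercase letters, uppercase letters, digits and symbols. The function name `generate_password`, the 12-character minimum and the symbol set are assumptions chosen for this illustration, not an official recommendation; a reputable password manager does the same job without any code.

```python
import secrets
import string

# Symbol set chosen for illustration only; some sites accept other symbols.
SYMBOLS = "!@#$%^&*-_"


def generate_password(length: int = 16) -> str:
    """Return a random password with lower, upper, digit and symbol characters."""
    # The 12-character minimum is an assumption of this sketch.
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + SYMBOLS
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class appears at least once.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in SYMBOLS for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

Each run prints a different password. Using a separate password for every account means a leak on one site does not expose the others.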

The reported crimes are only a fraction of the total; many more never make it to a police station. Staying aware and spreading knowledge is an important step in fighting this technological injustice, because an invasion like this can threaten a person’s well-being and push them towards a step that could cost them their life.

Image Credits: Google Images

Sources: The Economic Times, The New Indian Express, The Times Of India

Find the blogger: @shubhangichoudhary_29
